How GPUs and LBM are revolutionizing CFD

The combination of GPUs and LBM is bringing CFD to a new era

In recent years we have seen an exponential increase in GPU performance and usage, which has revolutionized a number of fields, notably image processing, data science and AI. Now this revolution is coming to CFD (computational fluid dynamics), and with it comes a change in the methods used to model and solve the Navier-Stokes equations, in order to get the most out of the GPU's massive parallelism. LBM (the lattice Boltzmann method) is an outstanding fit for that.

Thanks to advances in numerical models and algorithms, AeroSim Solver can run a simulation with more than 150M nodes on a single GPU with 24 GB of memory in no more than 24 hours. Cases that once were only possible on a cluster can now run on a home desktop. How and why the combination of GPUs and LBM allows this is what we will explain.

Performance is key for CFD

CFD has always evolved alongside revolutions in computing. The first solvers were developed and run on mainframes, used by governments and big companies to run simple cases, 2D and laminar. As computing capacity increased, so did the capabilities of CFD: more nodes, more complex models, and use in more and more applications.

Example of a mainframe

From time to time, a revolution in computing allows order-of-magnitude gains for CFD. One example is the arrival of multicore processors in the 2000s, which allowed simulations to be parallelized and brought a great increase in performance.

Now there is another opportunity for performance gains, an even greater one, with the use of GPUs and all their advances in recent years. But this requires changes in modeling and algorithms, and to understand what kind of changes need to be made, we must first understand how GPUs work and why they are so fast.

Why are GPUs so fast

In the 2000s, the processor industry hit the Power Wall: clock frequencies could no longer be increased because of heat constraints. From then on, the industry developed other ways of increasing processor performance. The main one was the multicore approach, in which, instead of increasing the number of instructions executed per core, the number of cores is increased and programs are parallelized.

That dictated how performance would increase from then on: more and more parallelization. And the GPU is the peak of that.

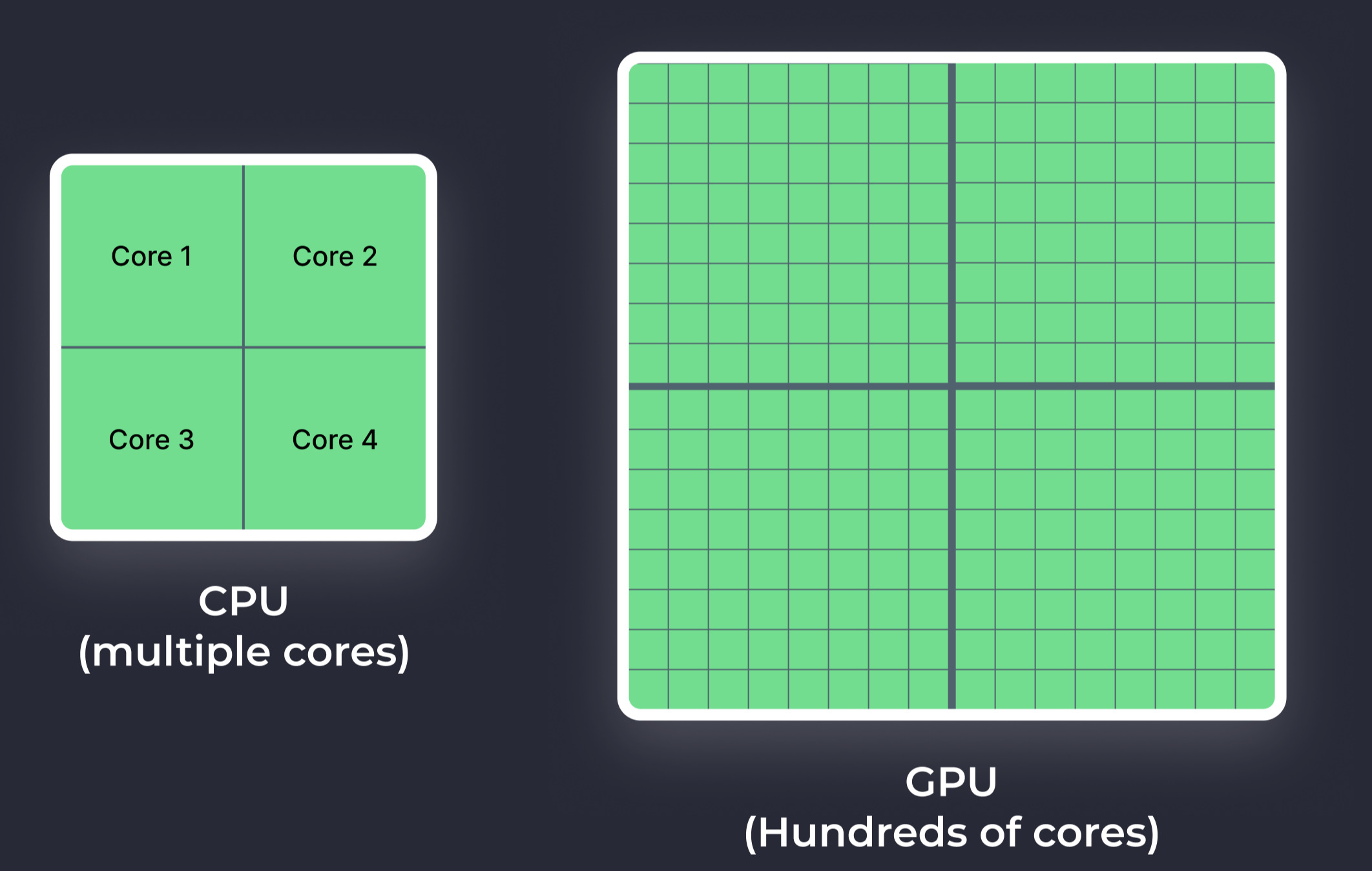

A GPU is a massively parallel processor. It has not 2, 10 or 50 cores, but thousands of them, and it can perform many more operations at the same time than a CPU can. But this comes at a cost.

While CPUs can perform and switch between tasks very easily, with very low latency, a GPU executes tasks with a specific characteristic: the same instructions must be executed over a large amount of data. This means that GPUs are good at executing the code inside a parallelizable for loop, but perform poorly on almost anything else.
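As a minimal sketch of what "a parallelizable for loop" means, the Python snippet below applies the same instruction to every element of two arrays. The function names and the data are hypothetical, chosen only for illustration. Because each iteration reads and writes only its own index, the iterations can run in any order, which is what allows a GPU to assign one thread to each of them.

```python
# Hypothetical example: scale-and-add over two arrays (often called "saxpy").
def saxpy_element(i, a, x, y):
    """Work for one index i: reads x[i] and y[i], nothing else."""
    return a * x[i] + y[i]


def saxpy(a, x, y):
    # Every iteration is independent of the others, so a GPU could
    # run one thread per index, all at the same time.
    return [saxpy_element(i, a, x, y) for i in range(len(x))]


x = [1.0, 2.0, 3.0, 4.0]
y = [10.0, 20.0, 30.0, 40.0]

result = saxpy(2.0, x, y)

# Visiting the indices in reverse order gives the same values per index,
# which shows that no iteration depends on another one.
reversed_visit = {i: saxpy_element(i, 2.0, x, y)
                  for i in reversed(range(len(x)))}
same_result = [reversed_visit[i] for i in range(len(x))]
```

On a GPU, the body of `saxpy_element` would be the kernel and the loop would disappear, replaced by one thread per index.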

Not only that, but the volume of operations must be large enough to hide the latency of operations on the GPU. To fit that many cores, each one cannot be as powerful as a CPU core, so a single GPU core has higher latency and lower throughput than a CPU core. But when all the cores are combined, the performance is many times greater.

Difference of cores between CPU and GPU

So for our CFD software to get the most out of GPUs, the algorithm must be fully parallelizable, and one of the most important properties for this is locality, which means that the algorithm depends only on local and neighbour data to run.

For example, if an algorithm requires the average density of the full domain, it does not have good locality. If a series of nodes depend on one another and must therefore be solved sequentially, that is also a bad sign for locality. This is why LBM has become a popular choice for GPUs: it has locality at its core.
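The three access patterns mentioned above can be sketched on a small 1D periodic field. This is only an illustration: the function names, the update formulas and the sample values are assumptions made for the example, not part of any solver.

```python
def local_update(field):
    """Good locality: each node reads only itself and its two neighbours."""
    n = len(field)
    return [(field[(i - 1) % n] + field[i] + field[(i + 1) % n]) / 3.0
            for i in range(n)]


def global_average(field):
    """Poor locality: the result needs every node of the domain."""
    return sum(field) / len(field)


def sequential_sweep(field):
    """Poor locality: node i needs the already-updated node i - 1,
    so the nodes must be processed one after another."""
    out = list(field)
    for i in range(1, len(out)):
        out[i] = 0.5 * (out[i] + out[i - 1])
    return out


density = [1.0, 2.0, 3.0, 6.0]

smoothed = local_update(density)    # each node could be its own GPU thread
mean = global_average(density)      # requires a reduction over all nodes
swept = sequential_sweep(density)   # order of the nodes matters
```

Only `local_update` maps directly to one independent thread per node; the other two need either communication across the whole domain or a fixed processing order.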

LBM is the best fit for GPUs

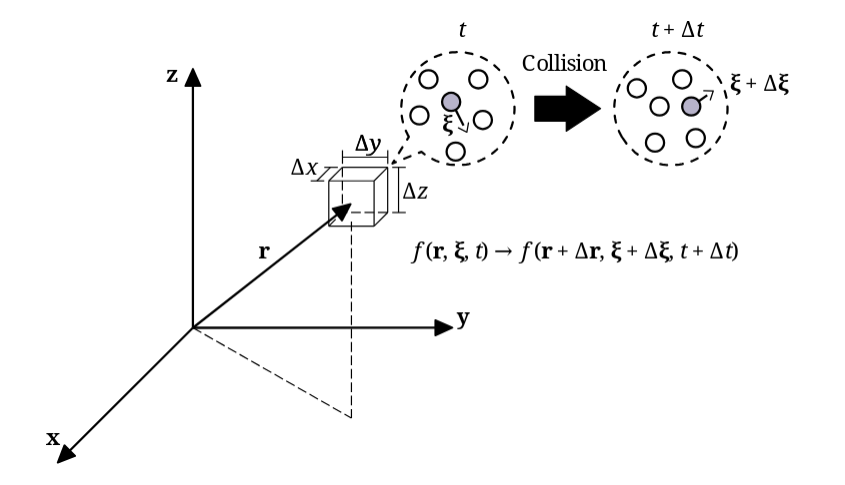

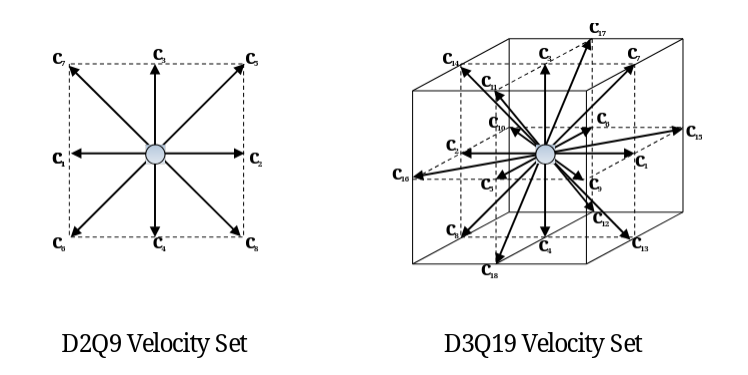

The LBM (lattice Boltzmann method) is a relatively recent approach to solving the Navier-Stokes equations numerically, in an indirect manner. The method is based on the Boltzmann equation and takes a mesoscopic approach: populations (particle distributions) stream and collide, and the macroscopic values (such as velocity and density) are functions of these populations.
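For reference, the simplest textbook form of the method uses the single-relaxation-time (BGK) collision operator. It is shown here only as an illustration and is not necessarily the collision model used in practice. The notation is an assumption of this sketch: $f_i$ is the population moving with discrete velocity $\mathbf{c}_i$, $f_i^{\mathrm{eq}}$ is its equilibrium value, $\tau$ is the relaxation time and $\Delta t$ is the time step.

$$
f_i(\mathbf{x} + \mathbf{c}_i \Delta t,\; t + \Delta t) = f_i(\mathbf{x}, t) - \frac{\Delta t}{\tau}\left[ f_i(\mathbf{x}, t) - f_i^{\mathrm{eq}}(\mathbf{x}, t) \right]
$$

The macroscopic density $\rho$ and velocity $\mathbf{u}$ are moments of the populations at the same node:

$$
\rho = \sum_i f_i, \qquad \rho\,\mathbf{u} = \sum_i \mathbf{c}_i f_i
$$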

LBM collision and streaming process

Although the numerical formulation of the method is very complex and indirect, its bulk implementation can be summarized in two operations: collision, when the populations at a lattice node collide, and streaming, when populations travel to neighbouring nodes. So the main LBM algorithm is highly local: collision requires only the data of one node, and streaming only that of its neighbours.
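To make the two operations concrete, here is a minimal sketch of a collide-and-stream loop on a 1D lattice with three velocities (D1Q3), written in plain Python. It assumes the simple BGK collision operator, a periodic domain and a hypothetical relaxation time `TAU`. It is not the AeroSim Solver implementation, which relies on more advanced collision models.

```python
# D1Q3 lattice: three discrete velocities (rest, right, left) and weights.
C = (0, 1, -1)
W = (2.0 / 3.0, 1.0 / 6.0, 1.0 / 6.0)
TAU = 0.8  # hypothetical relaxation time, in lattice units


def equilibrium(rho, u):
    """Second-order equilibrium populations for density rho and velocity u."""
    return [w * rho * (1.0 + 3.0 * c * u + 4.5 * (c * u) ** 2 - 1.5 * u * u)
            for c, w in zip(C, W)]


def collide(f_node):
    """Collision: uses only the populations stored at this node."""
    rho = sum(f_node)
    u = sum(c * fi for c, fi in zip(C, f_node)) / rho
    feq = equilibrium(rho, u)
    return [fi - (fi - fe) / TAU for fi, fe in zip(f_node, feq)]


def stream(f):
    """Streaming: population i moves to the neighbour in direction C[i]."""
    n = len(f)
    out = [[0.0] * len(C) for _ in range(n)]
    for x in range(n):
        for i, c in enumerate(C):
            out[(x + c) % n][i] = f[x][i]  # periodic boundaries
    return out


def step(f):
    return stream([collide(node) for node in f])


def total_mass(f):
    return sum(sum(node) for node in f)


def total_momentum(f):
    return sum(c * fi for node in f for c, fi in zip(C, node))


# Fluid at rest with a small density bump in the middle of an 8-node domain.
f = [equilibrium(1.1 if x == 4 else 1.0, 0.0) for x in range(8)]
mass_before = total_mass(f)
for _ in range(20):
    f = step(f)
mass_after = total_mass(f)
momentum_after = total_momentum(f)
```

Both `collide` and the inner body of `stream` touch a single node and its immediate neighbours, so on a GPU each node could be handled by its own thread.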

Another important locality characteristic is that the macroscopic values at a node can be calculated using only the populations of that node, even for quantities such as stress that would otherwise require finite differences. So density, pressure, velocity, temperature and stress are all calculated from local information only, avoiding finite difference methods.
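As an illustration of this locality, the sketch below computes density, velocity and one shear stress component from the nine populations of a single D2Q9 node. It assumes a BGK-type relation between the non-equilibrium second moment and the viscous stress, in lattice units; the velocity ordering, the relaxation time `tau` and the sample values are hypothetical.

```python
# D2Q9 velocity set (hypothetical ordering) and weights.
CX = (0, 1, 0, -1, 0, 1, -1, -1, 1)
CY = (0, 0, 1, 0, -1, 1, 1, -1, -1)
W = (4 / 9,) + (1 / 9,) * 4 + (1 / 36,) * 4


def equilibrium(rho, ux, uy):
    """Second-order equilibrium populations of one node."""
    usq = ux * ux + uy * uy
    feq = []
    for cx, cy, w in zip(CX, CY, W):
        cu = cx * ux + cy * uy
        feq.append(w * rho * (1.0 + 3.0 * cu + 4.5 * cu * cu - 1.5 * usq))
    return feq


def macroscopics(f, tau):
    """Density, velocity and shear stress from the populations of ONE node."""
    rho = sum(f)
    ux = sum(cx * fi for cx, fi in zip(CX, f)) / rho
    uy = sum(cy * fi for cy, fi in zip(CY, f)) / rho
    # xy second moment of the populations; its equilibrium part is rho*ux*uy.
    pi_xy = sum(cx * cy * fi for cx, cy, fi in zip(CX, CY, f))
    pi_xy_neq = pi_xy - rho * ux * uy
    # Assumed BGK relation between non-equilibrium moment and viscous stress.
    sigma_xy = -(1.0 - 0.5 / tau) * pi_xy_neq
    return rho, ux, uy, sigma_xy


# A node at equilibrium carries no viscous stress.
node = equilibrium(1.2, 0.05, -0.02)
rho, ux, uy, sigma_xy = macroscopics(node, tau=0.8)

# Perturbing the diagonal populations adds shear without changing
# the density or the momentum of the node.
delta = 0.001
sheared = list(node)
for i, sign in zip((5, 6, 7, 8), (1, -1, 1, -1)):
    sheared[i] += sign * delta
rho_s, ux_s, uy_s, sigma_xy_s = macroscopics(sheared, tau=0.8)
```

No neighbouring node is read anywhere in `macroscopics`, which is the point of the paragraph above: the stress comes from the populations themselves rather than from velocity differences between nodes.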

LBM velocities set

LBM challenges

But since the method is relatively new, there was much to be done in modeling and algorithms to make it feasible for industrial applications, and AeroSim, along with its academic partners, has helped bridge that gap in the last few years.

Some of the challenges that we encountered are: numerical instability, which required the use of advanced collision models along with LES models; domain refinement, which required novel numerical and algorithmic developments; and memory usage, which is a major bottleneck in LBM and which the new macroscopics representation allowed us to overcome, with memory savings of more than 50%; among many other challenges.
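A rough back-of-the-envelope calculation shows why memory is such a bottleneck. The sketch below takes the figures quoted earlier in this article (150M nodes on a 24 GB GPU) and compares the resulting per-node budget with the cost of storing raw populations. The lattice (D3Q19), the 32-bit floats, the double buffering and the decimal gigabytes are all assumptions made for illustration, not details of AeroSim Solver.

```python
def bytes_per_node(memory_bytes, nodes):
    """Memory available per node if the whole GPU memory is used."""
    return memory_bytes / nodes


def population_storage(q, bytes_per_value, copies):
    """Bytes per node needed to store q populations, `copies` times."""
    return q * bytes_per_value * copies


# Figures quoted in the article: 24 GB of GPU memory, 150M nodes.
budget = bytes_per_node(24e9, 150e6)

# Assumptions: D3Q19 lattice, 32-bit floats.
two_copies = population_storage(q=19, bytes_per_value=4, copies=2)
one_copy = population_storage(q=19, bytes_per_value=4, copies=1)

headroom_two_copies = budget - two_copies
headroom_one_copy = budget - one_copy
```

Under these assumptions, two copies of the populations alone would take 152 of the roughly 160 bytes available per node, leaving almost nothing for boundary data, refinement bookkeeping or output fields, which is why a more compact representation matters.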

With all of this, we feel that the time has come for LBM and GPUs to revolutionize CFD. You can check the results and numbers for yourself in our portfolio, with over 90 simulation comparisons against wind tunnel data, and in AeroSim Solver, which is web based.

AeroSim is bringing CFD to a new era, making possible what was once unthinkable: democratizing CFD so that everyone can run simulations with 100M nodes on their home computer, not in a week, but in a day.

Summary

GPUs along with LBM are revolutionizing CFD, just as GPUs have in AI and data science. With LBM's natural fit for massive parallelization and the performance of GPUs, we are seeing unprecedented levels of performance and scalability in CFD. At AeroSim, we have overcome challenges that stood in the way for years, such as numerical stability, domain refinement, and memory efficiency, enabling simulations with over 200M nodes on a single GPU.

Waine is a computer engineer with over 7 years of hands-on experience in numerical simulations for Computational Fluid Dynamics (CFD) using the Lattice Boltzmann Method (LBM).